Decode Digital Market has covered a lot of SEO topics so far, from SEO mistakes to link building methods and guest blogging.

Today, we will look at the important SEO glossary terms that SEOs toss around, leaving the people around them as confused as if they were speaking Dothraki from “Game of Thrones”.

You don’t need to feel that way anymore; this guide is here to rescue you.

Analytics is an integral part of SEO. Search Engine Optimization isn’t just doing On Page SEO, Off-Page SEO & Technical SEO. I am not saying that these won’t drive results. They will, but only if you are strategic about them.

How can you be strategic in the absence of analytical data?

Tools like Google Analytics, SEMrush, Omniture and even Google Search Console help you create SEO Strategies that are backed by data. Data-Driven Marketing is the key to nailing your SEO Strategy.

Scraping in SEO means just what the name suggests: extracting data from websites. There are tools for it, and scraping helps SEOs extract and analyze data so they can make informed decisions. A simple example of a tool built on this kind of data collection is the Wayback Machine, which crawls and archives web pages.

On the Wayback Machine you can look up the competitor website you use as a benchmark and see how its UX has evolved over the years.

The Wayback Machine shows exactly how a certain website appeared back in 2008.

SEO is an acronym for Search Engine Optimization.

It’s a set of best practices adopted to make a website search engine friendly so that the website can potentially rank on the search engine, drive high traffic and achieve business goals like lead generation, ad revenue, affiliate conversions, and product sales.

Search engines are a huge potential market, where trillions of search queries are performed every year by billions of users (potential customers) across the world.

SEM is an acronym that stands for Search Engine Marketing. Search Engine Marketing is paid advertising on popular search engines like Google & Bing.

On Google, paid advertisements are run via Google Ads (formerly Google AdWords). Using Google Ads you can run search ads, responsive ads, display ads & also YouTube ads.

With SEM, businesses generate leads & drive sales. SEM is often run alongside SEO campaigns, as the two complement each other.

Link Juice is a term used in SEO for link building. It means the amount of value (authority) that a page passes to another page, either on the same website or on another website altogether.

It is important to keep link juice in mind throughout your SEO campaigns, because as an SEO you need to know whether the links you are building and the pages you are creating are passing link juice or not. The success of SEO campaigns depends on it.

Backlinks are the lifeblood of SEO. Backlinks are links to our website that we build or earn on other websites.

Types of backlinks

- Citation backlinks

- Social Bookmark backlinks

- Editorial backlinks

- Web 2.0 backlinks

- Guest blogging backlinks, AKA contextual backlinks

Backlinks work the same way votes work for politicians. Would you vote for a politician nobody is talking about?

I wouldn’t either, and that’s exactly how Google feels. Your backlinks on other websites are like a vote bank that you build on an ongoing basis. When Google crawls these other sites and finds a dofollow link to your website, it passes link value, which benefits your website with higher rankings and more authority.

Anchor text is the visible, clickable text of a hyperlink. In link building, the anchor text is usually the keyword you want to rank higher for on Google, preferably one with a high search volume.

What we do is build links to our website on different websites using those keywords as anchor text; dofollow links are the ones that help with this.

For example, if you are a house cleaning service provider in Mumbai, the keyphrase you would want to rank for is “house cleaning services in Mumbai”, so you would build links to your website’s /house-cleaning-services page on other websites with the anchor text “house cleaning services in Mumbai”. In HTML, such a link looks like `<a href="https://www.example.com/house-cleaning-services">house cleaning services in Mumbai</a>`.

Anchor texts can also be branded: if you want to leverage branding, you use your brand name as the anchor text. This has shown great results lately.

JavaScript is a programming language that comes up in plenty of SEO conversations. Some SEOs treat JavaScript as the devil because it can slow down your website, but an SEO with a good understanding of JavaScript can prevent scripts from slowing the site down.

Many of the scripts we add to a website, such as the Google Analytics tracking code and the Facebook Pixel remarketing code, are JavaScript.

With that in mind, it’s advisable to add these scripts via Google Tag Manager, which loads them asynchronously from a single container instead of each one being hardcoded into the page, reducing their impact on page load.

In the same way, website development & design often add a lot of JavaScript that can slow down the website. It is important to handle this JavaScript carefully, for example by inlining small critical scripts and deferring the rest, to prevent that from happening.

Keyword Stemming is an SEO term related to keyword research. It means diversifying your head keywords with variations (including different word forms of the same root, such as clean, cleaner and cleaning) so that Google recognizes multiple user/search intents. Once Google finds these variations on your website, your website stands a chance to rank for multiple interrelated keywords.

For example, if your website sells house cleaning services, variations of “house cleaning services” include “home cleaning services” and “residential cleaning services”. You can work these variations into the page by strategically distributing them across the title, headings, image alt attributes and body.

Citations occupy an integral place in Local SEO campaigns. Citations simply mean submitting your local business information to local business listing sites. Three important pieces of information must be present on these citation sites: Name, Address & Phone Number (NAP).

Some examples of citation sites are Yelp, Foursquare, Angie’s List, TomTom, etc.

Some examples of hyper-local citation sites are florida.bizhwy.com, southfloridareview.com, etc.

Citations matter a lot for Local SEO because local business websites need to rank in their locale; for example, a home cleaning services provider in Florida essentially needs to rank in Florida. Google finds it more plausible to give that business a ranking if websites in Florida are talking about it.

LSI stands for Latent Semantic Indexing. LSI plays a very critical role in keyword research and sets the groundwork for SEO campaigns to build on.

The concept behind LSI keywords is to add conceptually related keywords so that Google’s algorithm can gain a deeper understanding of the content.

For example, if you are writing about SEO, it’s important to use the full form of the abbreviation, i.e. search engine optimization, in places, along with related keyphrases like “what is SEO” and “how does SEO work”.

LSI keywords are an antidote to keyword stuffing.

Some good places to hunt for LSI keywords are Google autosuggest, Google related searches, LSI Graph, Ubersuggest and AnswerThePublic.

A canonical tag specifies the original version of a page. As a best practice, important pages should have a canonical tag, especially pages that might have duplicates, or pages for which internal search strings could create temporary duplicates.

If two pages on a website have the same content, one of them will be treated as duplicate content, which can hurt the ranking of both pages because Google’s algorithm can’t tell which one to rank.

A canonical tag specifies which page is the original and consolidates the authority onto that page.

For example, Shopify stores can appear to have a lot of duplicate content because of collection URL paths. A visitor can arrive at a product landing page via example.com/products/the-product

OR

example.com/collections/the-collection/products/the-product. It is the exact same product, which means the same content, but the URLs are different. In this case, the collection URL should carry a canonical tag pointing to the example.com/products/the-product page.

A canonical tag looks like this: `<link rel="canonical" href="https://www.example.com">`

On CMSs like WordPress or Shopify, among others, this is taken care of by the CMS itself. If your website is built on static HTML/CSS, your developer will have to add these tags to the pages manually.
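To make the Shopify example concrete, here is a minimal Python sketch that works out which URL a collection-scoped product page should point its canonical tag at. It assumes Shopify-style paths (`/collections/<collection>/products/<handle>`) and that query strings are dropped from the canonical; `canonical_for` and `canonical_tag` are hypothetical helper names, not part of any CMS.

```python
from urllib.parse import urlsplit, urlunsplit


def canonical_for(url: str) -> str:
    """Return the canonical URL for a (hypothetical) Shopify-style product URL.

    Collection-scoped paths like /collections/<collection>/products/<handle>
    map to /products/<handle>; any other path is treated as its own canonical.
    """
    parts = urlsplit(url)
    segments = [s for s in parts.path.split("/") if s]
    if len(segments) == 4 and segments[0] == "collections" and segments[2] == "products":
        path = "/products/" + segments[3]
    else:
        path = parts.path
    # Assumption: query strings (e.g. ?variant=...) and fragments are dropped
    return urlunsplit((parts.scheme, parts.netloc, path, "", ""))


def canonical_tag(url: str) -> str:
    """Build the <link> element to place in the page's <head>."""
    return f'<link rel="canonical" href="{canonical_for(url)}">'


print(canonical_tag("https://www.example.com/collections/the-collection/products/the-product"))
# <link rel="canonical" href="https://www.example.com/products/the-product">
```

Both URL variants end up declaring the same canonical, which is exactly what consolidates the duplicate pages into one.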

A meta tag is an HTML tag in a page’s `<head>` that isn’t visible in the page content but describes the content & context of the page.

In SEO, the term usually covers the title tag, the meta description and meta keywords, and image alt attributes are often optimized alongside them.

Usually, the keyphrases you are trying to rank a page for are the keywords you will incorporate in its meta tags.

DoFollow describes a link that search engine bots are allowed to follow and pass authority through. There is no actual rel="dofollow" attribute in HTML: any link that isn’t marked with a qualifier like nofollow is treated as dofollow by default.

The nofollow attribute was introduced by Google in 2005 to fight UGC backlink spam like comment backlinks. It was very easy to create backlinks with tactics like blog commenting, and if there were no way to qualify the worth of a backlink, pretty much anyone could rank well. That’s why the distinction between dofollow and nofollow links exists.

Here’s what a dofollow link looks like

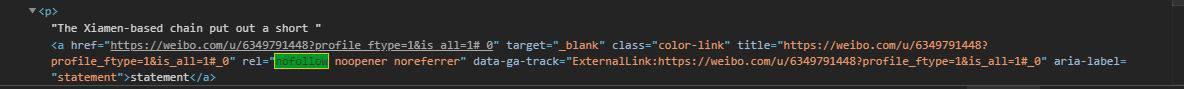

NoFollow is another link directive for search engine bots. As the name suggests, it tells search engine bots not to follow the link or pass authority to its destination.

UGC links like blog comments fall into the NoFollow category. Webmasters can also choose specifically which links on their website they want to make NoFollow.

Websites with tons of pages and huge traffic have to be very careful with their DoFollow vs NoFollow distribution. That’s why websites like Entrepreneur & Forbes often give only NoFollow links in contributors’ content.

People submitting content to such publications are after fame, authorship, branding and referral traffic.

Here’s what a nofollow link looks like: `<a href="https://www.example.com/" rel="nofollow">Example</a>`

UGC is an acronym that stands for User Generated Content. UGC in Search Engine Optimization refers to content that users coming to your website can create as a means of engaging.

Blog comments are a common example. UGC blog comments were exploited in the past to build links, and nofollow originated to fight that spam. In September 2019, Google introduced additional link attributes, rel="ugc" and rel="sponsored", alongside rel="nofollow". (source)
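If you want to audit which links on a page pass authority, a small script can read each link’s rel attribute. This is a minimal sketch using Python’s built-in `html.parser`; it assumes that any of nofollow, ugc or sponsored marks a link as not followed, and `LinkAuditor` is a hypothetical class name. Keep in mind search engines may treat these attributes as hints rather than strict rules.

```python
from html.parser import HTMLParser

# rel values that qualify a link as not passing authority
QUALIFIERS = {"nofollow", "ugc", "sponsored"}


class LinkAuditor(HTMLParser):
    """Collects (href, followed) pairs for every <a> tag with an href."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        attrs = dict(attrs)
        href = attrs.get("href")
        if not href:
            return
        rel_tokens = set((attrs.get("rel") or "").lower().split())
        # No qualifier means an ordinary ("dofollow") link
        self.links.append((href, not (rel_tokens & QUALIFIERS)))


html = """
<p><a href="https://a.example/">Editorial link</a>
<a href="https://b.example/" rel="nofollow">Nofollow link</a>
<a href="https://c.example/" rel="ugc nofollow">Comment link</a>
<a href="https://d.example/" rel="sponsored">Paid link</a></p>
"""
auditor = LinkAuditor()
auditor.feed(html)
for href, followed in auditor.links:
    print(href, "followed" if followed else "not followed")
```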

A crawler is a program that visits websites and updates the data in its database. Googlebot, for example, is the crawler that visits websites so that Google can index their pages and later rank them. All of that starts with bots, also called spiders or crawlers.

There are also Bingbot, AhrefsBot, SEMrush’s bots and many others that routinely crawl the web to update their databases.

Then there are crawlers you run yourself, like Screaming Frog, a desktop program you can use to crawl a website on demand and find meaningful data.

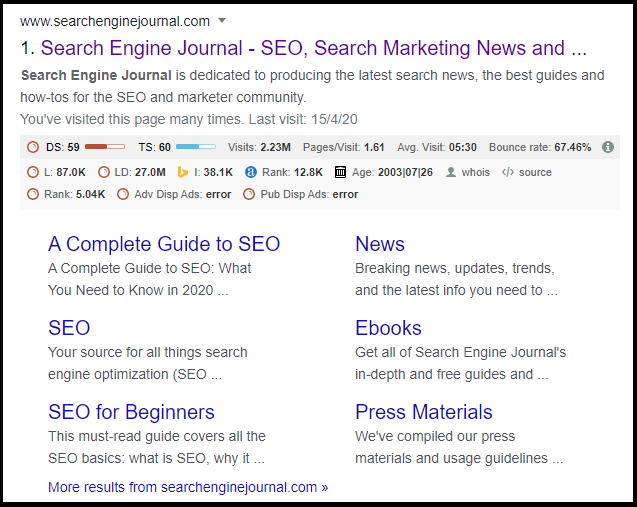

SERP is an acronym that stands for Search Engine Results Page. When a query is performed on a search engine, its algorithm looks into its index and produces results based on ranking factors, the user’s search history/cookies and location.

The pages of results that are produced are called search engine results pages (SERPs).

Link Bait is a technique that reverses the usual link building process. Link building happens in two ways: active link building & passive link building. Link bait is a strategy used in passive link building.

In this technique, you develop a content asset that is the best content on its topic. For example, if the topic is “Technical SEO Factors that matter in 2020 & Beyond”, your job is to build the best asset on it. It has to be the best blog post on that topic on the internet, length-wise, relevance-wise, scope-wise and user experience-wise.

Good content naturally ranks well, and you can use ads to push such content if need be. When publishers find good content, they are likely to link to it from their websites.

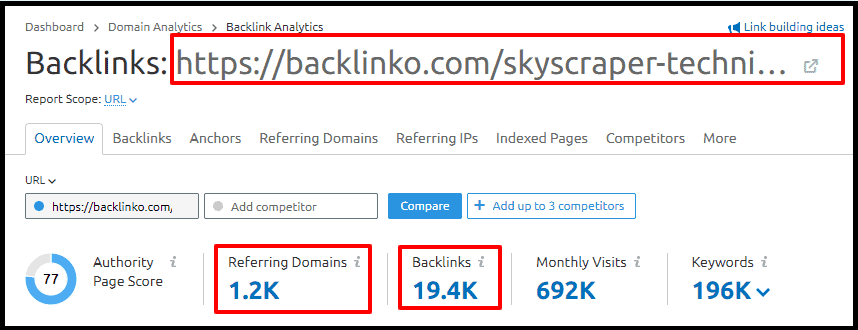

Brian Dean does this really well on his Backlinko blog: he publishes very comprehensive, up-to-date blog posts, and as a result he attracts a lot of natural inbound links.

Take Backlinko’s famous Skyscraper Technique article, for example.

That article has attracted 19,400 backlinks to date from 1,200 referring domains.

Cloaking is a sneaky black hat technique some black hat SEO practitioners use to get results. In this technique, search engine bots are served different content from what users see. It is used to manipulate search engine algorithms into seeing what they need to see to grant rankings.

The reasoning behind it is the common belief that you can’t create content that works for both users and bots at the same time; cloaking tries to get around that by serving each its own version.

However, it’s a black hat SEO strategy and I clearly don’t recommend it, as it can have serious consequences.

Keyword Density in SEO means how often a keyword appears on a page, relative to the total number of words on it. As a rule of thumb, the ideal keyword density is 1-2%.
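Here is a rough way to calculate keyword density yourself. This Python sketch assumes one common formula, (occurrences × words in the phrase) ÷ total words × 100; SEO tools don’t all agree on the formula, so treat the number as a sanity check rather than an exact score.

```python
import re


def keyword_density(text: str, phrase: str) -> float:
    """Percentage of the page's words taken up by occurrences of `phrase`."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    target = re.findall(r"[a-z0-9']+", phrase.lower())
    if not words or not target:
        return 0.0
    n = len(target)
    # Slide a window of n words across the text and count exact matches
    hits = sum(1 for i in range(len(words) - n + 1) if words[i:i + n] == target)
    return hits * n * 100 / len(words)


sample = "We offer house cleaning in Mumbai. Book house cleaning today."
print(keyword_density(sample, "house cleaning"))  # 40.0
```

A 10-word snippet that mentions the phrase twice scores 40%, far above the 1-2% rule of thumb; on a real page, a number like that is a red flag for keyword stuffing.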

To rank a web page for a specific search phrase/keyword, the trick isn’t to repeat that keyword unnaturally and frequently; that’s called keyword stuffing. What you need to do is use LSI & semantic keywords to strike the balance.

Check out WordStream’s guide on Keyword Density

Keyword Stuffing is a bad practice in search engine optimization where you stuff a keyword wherever you find space, even if it doesn’t make sense. Like: X#$% is a great appetizer, you need to try X#$%. X#$% is the future. X#$%, just try it once.

Wouldn’t that annoy users? I bet it would. Google ultimately wants to satisfy users, so it will always focus on user experience.

Sandbox is a concept in Google SEO. According to many SEO practitioners, Google doesn’t trust new websites, so it puts them in a sandbox period of 4-6 months, during which the website sees little to no organic growth. Google has never officially confirmed that a sandbox exists.

The growth is suppressed, and only once the website comes out of the sandbox period does it see the growth it deserves. (source)

Text/HTML Ratio is the amount of visible text on a page in proportion to its HTML code. As a rule of thumb, there should be more text, i.e. content, in proportion to HTML, i.e. code.

Otherwise, the web page may be considered thin content.

Here’s a free tool to calculate text/code ratio.
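If you’d rather check the ratio yourself, the calculation is simple: visible text length divided by total HTML length. This is a minimal Python sketch using the built-in `html.parser`; it assumes that text inside `<script>` and `<style>` doesn’t count as content, and `visible_text` and `text_to_html_ratio` are hypothetical helper names. Online tools may count slightly differently.

```python
from html.parser import HTMLParser


class TextExtractor(HTMLParser):
    """Collects visible text, skipping the contents of <script> and <style>."""

    def __init__(self):
        super().__init__()
        self.parts = []
        self._skip = 0

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip:
            self._skip -= 1

    def handle_data(self, data):
        if not self._skip:
            self.parts.append(data)


def visible_text(html: str) -> str:
    parser = TextExtractor()
    parser.feed(html)
    parser.close()
    # Collapse runs of whitespace so indentation doesn't inflate the count
    return " ".join(" ".join(parser.parts).split())


def text_to_html_ratio(html: str) -> float:
    """Visible text length as a percentage of the full HTML length."""
    return len(visible_text(html)) / len(html) * 100 if html else 0.0
```

Usage: fetch a page’s HTML (for example, saved from your browser) and pass the string to `text_to_html_ratio`.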

Thin Content is a web page with fairly little content. Every web page that is indexed and that you want to rank should have a decent amount of content; Google is reluctant to rank thin content.

Pages like cart and thank-you pages are a different matter. Many CMSs noindex such pages by default; if yours doesn’t, you should noindex them manually so that your website appears neat to Google.

An algorithm is a process or set of rules to be followed in calculations or other problem-solving operations, especially by a computer.

Algorithm is a term SEOs toss around a lot when explaining things to their clients. Search engines like Google rank pages using algorithms; Google doesn’t have people manually deciding the results for each search.

It’s the algorithm that makes it happen: it applies a defined set of criteria to keep the search engine working every second.

E-A-T comes from Google’s Search Quality Rater Guidelines. It’s not an algorithm update in itself; think of it as a set of quality criteria that ranking updates reward.

E-A-T stands for Expertise, Authoritativeness and Trustworthiness. Publishers now need to demonstrate these qualities to rank well. E-A-T mostly impacts health & finance niche sites.

Alt Attribute is an attribute for images that provides alternative text explaining the context of the image, because search algorithms can’t reliably read an image and make sense of it.

It is important to have an alt attribute with appropriate alt text for every image on the web page. It helps a great deal with image SEO.

Here’s what the image alt attribute looks like in code: `<img src="house-cleaning.jpg" alt="House cleaning team at work in Mumbai">`. If your website is on a CMS like WordPress, there’s an easy way to add image alt text.
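To find images that are missing alt text, you can scan a page’s HTML. This Python sketch, built on the standard `html.parser` module, flags every `<img>` whose alt attribute is missing or empty; `AltAuditor` is a hypothetical class name. Note that an empty alt is sometimes intentional for purely decorative images, so review the flagged list before changing anything.

```python
from html.parser import HTMLParser


class AltAuditor(HTMLParser):
    """Records the src of every <img> whose alt attribute is missing or empty."""

    def __init__(self):
        super().__init__()
        self.missing = []

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        attrs = dict(attrs)
        if not (attrs.get("alt") or "").strip():
            self.missing.append(attrs.get("src", "(no src)"))


page = (
    '<img src="team.jpg" alt="Our house cleaning team in Mumbai">'
    '<img src="logo.png">'
    '<img src="divider.png" alt="">'
)
auditor = AltAuditor()
auditor.feed(page)
print(auditor.missing)  # ['logo.png', 'divider.png']
```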

Artificial Intelligence, as we know it, is a program that can make decisions by itself, without human intervention, and get done the tasks it was built for.

In SEO, when we talk about Artificial Intelligence, we are referring to the AI in Google’s search algorithm. Google uses artificial intelligence in its algorithm to produce search results by weighing 200+ ranking factors along with additional factors like location & interests.

A very robust and intelligent artificial intelligence program is required to handle this.

It was Google’s RankBrain algorithm update that introduced artificial intelligence into the Google search algorithm. (source)

REGEX stands for regular expressions, and it’s an important feature in Google Analytics. Using regex in Google Analytics, you can find crucial data by segregating it into content groups and seeing which product category or landing page is getting more organic traffic. Regex is also used when applying filters, segments and audiences.
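Before pasting a pattern into a Google Analytics filter, it helps to test it against a few real URL paths. This Python sketch uses the `re` module to sort landing-page paths into content groups; the `/blog/` and `/services/` paths and group names are hypothetical, and Google Analytics’ regex flavor may differ from Python’s in edge cases, so keep patterns simple.

```python
import re

# Hypothetical content groups for a site with /blog/... and /services/... URLs
CONTENT_GROUPS = {
    "Blog": re.compile(r"^/blog/"),
    "Services": re.compile(r"^/services/(seo|ppc|link-building)/?$"),
}


def content_group(path: str) -> str:
    """Return the first content group whose pattern matches the path."""
    for name, pattern in CONTENT_GROUPS.items():
        if pattern.search(path):
            return name
    return "Other"


for path in ["/blog/seo-glossary/", "/services/seo/", "/services/web-design/", "/contact"]:
    print(path, "->", content_group(path))
```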

Website Authority is a key concept in SEO used to assess how authoritative a website is. A website’s authority indicates its likelihood of performing well in SERPs organically.

Moz created the metric for it called “Domain Authority”

SEMrush calls it “Authority Score”

Ahrefs calls it “Domain Rating”

Each tool calculates its score differently, but factors commonly associated with website authority include domain age, referring domains, quality of backlinks, UX, On Page factors, Technical SEO factors, quality of content, organic traffic, pageviews and more.

Black Hat is a concept in SEO that isn’t spoken of kindly, and rightly so. Black Hat SEO practices are disliked by Google, which has clearly outlined in its Webmaster Guidelines that websites practising black hat SEO can face consequences like getting deindexed.

Some black hat SEO practices are

- Keyword Stuffing

- Doorway pages

- Cloaking

- PBN Backlinks

- Hidden content & links

- Alt Text over optimization

- Copied content

- Content Automation

Bounce rate is the percentage of visitors who land on your web page and leave without interacting further or visiting another page. The more visitors engage and move on to other pages, the lower the bounce rate, and vice versa.

Many SEOs watch bounce rate closely because a high bounce rate can be a sign that a page isn’t satisfying visitors, which can ultimately hurt that page’s rankings.

Suppose a visitor arrives at your web page about SEO services in Boston and leaves right away. That can signal to Google that the page is giving a poor user experience, and Google may then rank your page lower, even if your SEO service in Boston is better than everyone else’s.
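The calculation behind bounce rate is straightforward. This Python sketch uses the classic single-page definition: the share of sessions with exactly one pageview. It is a simplification, since it ignores interaction events, and GA4 calculates bounce rate differently; the session data below is hypothetical.

```python
def bounce_rate(pageviews_per_session):
    """Percentage of sessions that viewed only a single page."""
    sessions = list(pageviews_per_session)
    if not sessions:
        return 0.0
    bounces = sum(1 for pv in sessions if pv == 1)
    return bounces * 100 / len(sessions)


# Hypothetical pageview counts for five sessions
print(bounce_rate([1, 3, 1, 2, 5]))  # 40.0
```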

A branded keyword is a keyword containing your brand name. For example, if your brand name is Motorola, the branded keyword is Motorola. Make sure you use your branded keyword sufficiently in your link building campaigns, as it helps build your business’s brand signals.

And Google just loves brands and likes to rank them higher.

A breadcrumb is a text path, usually just below the header of a web page, showing users where they are relative to the website’s homepage. It’s good for user experience because it keeps visitors aware of where they are.

Breadcrumbs also show up on SERPs when any page of a website other than the homepage appears in the results.

Instead of the URL slug, with its words separated by hyphens, Google shows the path as a trail separated by arrows. For example, for an article titled “SEO Guide 2020”, without a breadcrumb the result shows www.example.com/seo-guide-2020, whereas with a breadcrumb it shows www.example.com › SEO, i.e. the category the article belongs to rather than the hyphenated slug.

It’s good from the user’s perspective, as they can see clearly what the article is about.

Here’s an example of a breadcrumb on Google SERP by Decode Digital Market

Doing this on WordPress is simple, as it can be achieved with the free version of the Yoast SEO plugin.
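If you aren’t using a plugin, breadcrumbs are usually described to Google with schema.org `BreadcrumbList` markup in JSON-LD. This Python sketch builds that markup from a list of (name, URL) pairs; the example.com URLs are hypothetical, and you should validate the output with Google’s Rich Results Test before publishing.

```python
import json


def breadcrumb_jsonld(trail):
    """Build BreadcrumbList JSON-LD from (name, url) pairs, homepage first."""
    return {
        "@context": "https://schema.org",
        "@type": "BreadcrumbList",
        "itemListElement": [
            {"@type": "ListItem", "position": i, "name": name, "item": url}
            for i, (name, url) in enumerate(trail, start=1)
        ],
    }


data = breadcrumb_jsonld([
    ("Home", "https://www.example.com/"),
    ("SEO", "https://www.example.com/seo/"),
    ("SEO Guide 2020", "https://www.example.com/seo/seo-guide-2020/"),
])
print('<script type="application/ld+json">')
print(json.dumps(data, indent=2))
print("</script>")
```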

Cache is a software component that stores data for future use. For site speed, the cache that matters most in SEO is the browser cache, which stores copies of files such as images, CSS and JavaScript.

It plays a big role in speeding up a website, because most people don’t clear their browser cache every single day, and some never do.

By leveraging browser caching, you can serve a returning visitor the cached copies of your website’s files instead of sending fresh copies every single time. That is why a website loads more quickly the second time you open it.
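Browser caching is controlled by the `Cache-Control` response header your server sends. This Python sketch picks a header value per file type and attaches it in a small `http.server` handler; the one-year policy for static assets and `no-cache` for HTML are assumptions, and long cache lifetimes are only safe if you rename (version) files when they change. `cache_control_for` and `CachingHandler` are hypothetical names.

```python
import os
from http.server import SimpleHTTPRequestHandler
from urllib.parse import urlsplit

LONG_CACHE = "public, max-age=31536000"  # one year, in seconds
CACHE_RULES = {ext: LONG_CACHE for ext in (".css", ".js", ".png", ".jpg", ".webp", ".woff2")}
HTML_POLICY = "no-cache"  # browser may keep a copy but must revalidate it first


def cache_control_for(request_path: str) -> str:
    """Choose a Cache-Control value from the file extension of the request path."""
    path = urlsplit(request_path).path  # ignore ?v=3 style query strings
    ext = os.path.splitext(path)[1].lower()
    return CACHE_RULES.get(ext, HTML_POLICY)


class CachingHandler(SimpleHTTPRequestHandler):
    """Static file server that adds a Cache-Control header to every response."""

    def end_headers(self):
        self.send_header("Cache-Control", cache_control_for(self.path))
        super().end_headers()
```

To try it locally, run `HTTPServer(("", 8000), CachingHandler).serve_forever()` (importing `HTTPServer` from `http.server`) and inspect the response headers in your browser’s developer tools. On production sites, the same headers are usually set in the web server or CDN configuration.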

AMP (Accelerated Mobile Pages) pages are also served from Google’s cache. That is why, when you open an AMP page from Google Search on your mobile, the URL you see is Google’s rather than the website’s.

Clickbait is a bad practice in SEO aimed at getting a higher click-through rate and more traffic.

To do this, publishers use click-baity title tags that over-promise and barely deliver. The title tag is what appears in the SERP.

Other than that, they use anchor text links within the web page suggesting the target page is about one thing, while it turns out to be about something else entirely.

A huge drawback of clickbait is a higher bounce rate: because the resulting page isn’t what users wanted to see, it doesn’t satisfy their intent.

CTR stands for click-through rate. It is the percentage of people who clicked your result out of everyone who saw it on the Google SERP (clicks ÷ impressions). The higher the CTR, the more relevant, or let’s say the better optimized, your listing is.

To increase CTR, you can optimize your title tag & meta description. Here’s an example.

You have searched for SEO Guide 2020 and there are 2 results. Which one would you click?

- SEO Guide 2020

- SEO Guide 2020 (Explained With Real Examples)

More people are likely to click the second result, because it offers something extra and it’s catchier.

Suppose 500 people saw both results and 410 chose to click the second one: that gives it a CTR of 82%, which is great. If the remaining 90 clicked the first result, its CTR would be 18%.
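The arithmetic in this example can be checked with a one-line formula. This Python sketch computes CTR as clicks divided by impressions, times 100, using the example’s numbers; `ctr` is just an illustrative helper name.

```python
def ctr(clicks: int, impressions: int) -> float:
    """Click-through rate as a percentage of impressions."""
    return clicks * 100 / impressions if impressions else 0.0


impressions = 500
print(ctr(410, impressions))  # 82.0, the second result
print(ctr(90, impressions))   # 18.0, the first result, if the other 90 searchers clicked it
```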

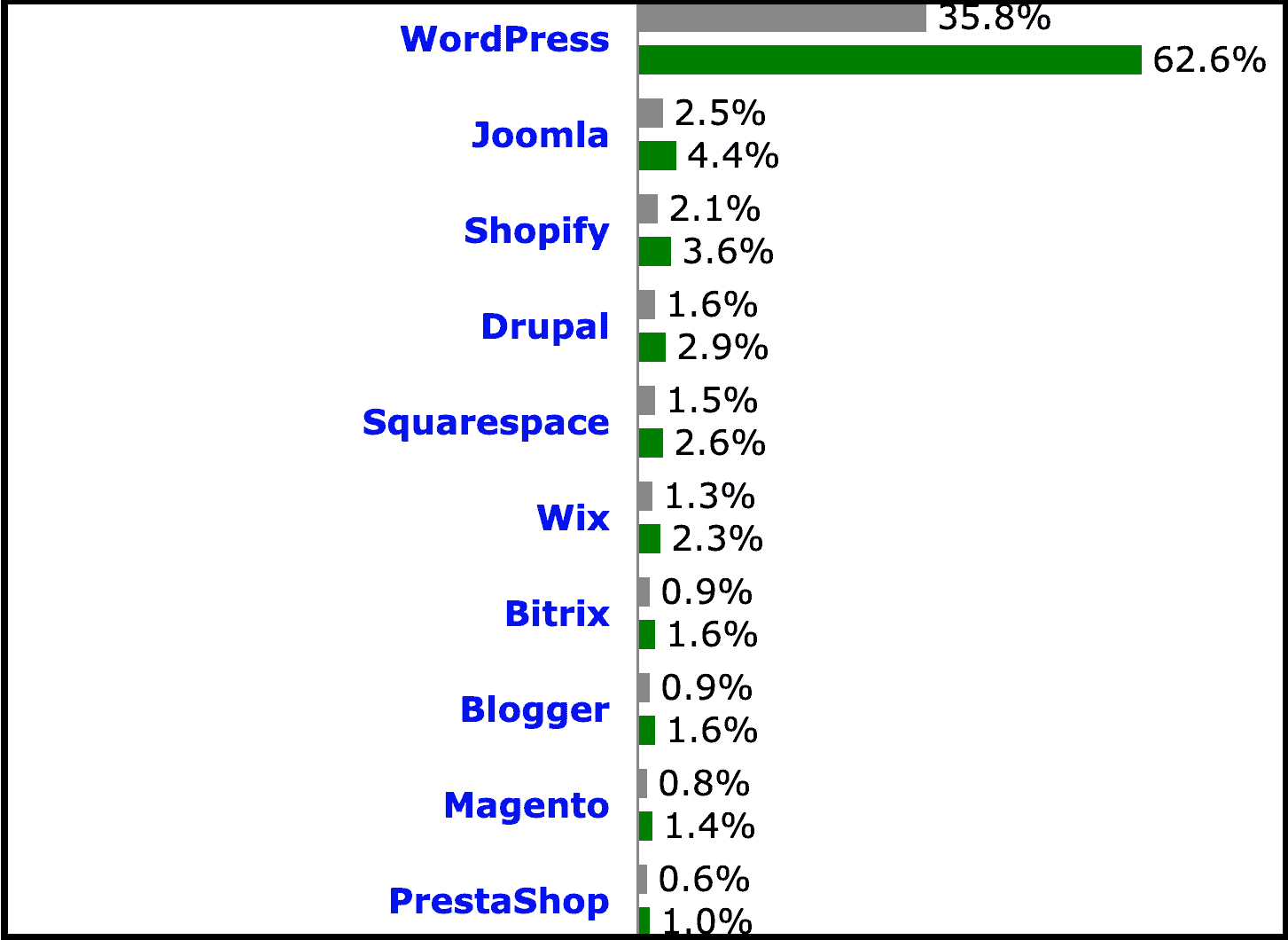

CMS stands for content management system. CMS plays a pivotal role in search engine optimization. A lot of websites that you will deal with are built on CMS instead of static HTML/CSS.

There are CMSs like WordPress, Shopify, Squarespace, Magento, PrestaShop, Wix and more.

To optimize a website, you need a proper understanding of the CMS on which the site is managed.

Check out this CMS market share infographic by Kinsta.

The CMS you choose should suit the business’s industry. For example, for e-commerce it can be Shopify, Magento or PrestaShop; for the rest it can be WordPress or Squarespace.

What is a conversion?

A conversion happens when a visitor on your website completes a goal. The goal may or may not directly make you money. The usual conversions are leads and sales.

Other conversions include visiting a specific page, adding a product to the cart, or spending a set amount of time on a particular page.

When users complete a goal, you can measure the goal conversion rate: the number of sessions that completed the goal divided by the total number of sessions.

For example, say you set a goal of sessions lasting at least 2 minutes. If 50 out of 1,000 sessions complete the goal, the conversion rate is 5%.
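Here is the same calculation as a short Python sketch. It reproduces the 50-out-of-1,000 example and also shows how a duration goal could be counted from a handful of hypothetical session durations; the helper names are illustrative only.

```python
def conversion_rate(conversions: int, sessions: int) -> float:
    """Goal conversion rate as a percentage of all sessions."""
    return conversions * 100 / sessions if sessions else 0.0


def duration_goal_completions(durations_seconds, threshold_seconds=120):
    """Count sessions lasting at least the goal threshold (2 minutes by default)."""
    return sum(1 for d in durations_seconds if d >= threshold_seconds)


print(conversion_rate(50, 1000))  # 5.0

sample = [30, 150, 45, 200, 90]  # hypothetical session durations in seconds
done = duration_goal_completions(sample)
print(done, conversion_rate(done, len(sample)))  # 2 40.0
```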

Conversion Rate Optimization comes into the picture as soon as you identify that the conversion rate is low. This is when you start optimizing to get more conversions, which can be achieved by tweaking UX and content.

For example, suppose you are not generating enough leads from a landing page with a lead generation form. As you think about why, it occurs to you that the form has far too many fields, which annoys users and stops them from completing it. So you remove the unnecessary fields.

As a result, your conversion rate is now 10% higher than usual. It is important to keep optimizing the conversion rate so you don’t miss out on conversions that could have been yours.

Crawl budget is the amount of crawling a search engine bot is willing to spend on your site when it comes over and begins a crawl.

A search engine crawler has only so much bandwidth to spare, so it won’t necessarily crawl every single page in one go. It will crawl some pages and come back later to crawl the rest, which on a large site can take a very long time.

That is where robots.txt optimization comes into play.

By optimizing your robots.txt, you can keep search engine bots away from low-value pages so that more of the crawl budget goes to your important pages. Setting priority levels in your sitemap is another commonly suggested step, though Google has said it ignores the priority value.

That is why, if you look at the robots.txt files of e-commerce websites with tons of products, you will find that they disallow a lot of pages: they don’t want to waste crawl budget on pages that don’t bring value.

Crawl errors are the dreaded errors reported in Google Search Console’s Coverage report. It is important to resolve them, as they can prevent your landing pages from being crawled and indexed.

Here’s a list of crawl errors you may encounter:

- 404 crawl error

- Soft 404

- Alternate page with proper canonical tag

- Crawl anomaly

- Noindex error

Disavow is a tool in Google Search Console that should be used with caution, often as a last resort. You use it if your site has attracted backlinks from super-spammy sites.

Sites with high spam scores often target spammy or illegal keywords, and getting backlinks from sites like these can get your website penalized.

Using the disavow tool, you can ask Google to ignore such backlinks and restore your site’s organic health on Google.
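The disavow tool takes a plain text file: one URL per line, `domain:` lines to disavow whole domains, and lines starting with `#` as comments. This Python sketch assembles such a file from lists you’ve already reviewed; the spammy URLs and domains below are hypothetical, and `build_disavow` is an illustrative helper, not part of any Google API.

```python
def build_disavow(urls=(), domains=(), note="Spammy links identified in backlink audit"):
    """Return disavow-file text: a comment, then domain: lines, then single URLs."""
    lines = [f"# {note}"]
    lines += [f"domain:{d.strip().lower()}" for d in sorted(set(domains))]
    lines += sorted(set(u.strip() for u in urls))
    return "\n".join(lines) + "\n"


text = build_disavow(
    urls=["https://spam.example/page1.html"],
    domains=["casino-links.example"],
)
print(text)
```

Save the output as a UTF-8 `.txt` file and upload it through the disavow tool only after double-checking every entry, since disavowing good links can hurt you.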

Keyword Difficulty is a SEMrush metric that estimates how hard it will be to rank organically on Google for a certain keyword/keyphrase.

It is on a scale of 0-100. In my experience, SEMrush’s keyword difficulty is more accurate than the difficulty estimates of other tools such as Ubersuggest and KWFinder.

Using advanced filters, you can extract a list of keywords in a particular niche with high search volume and low keyword difficulty.
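If you export keyword data to a spreadsheet, the same “high volume, low difficulty” filter can be reproduced in a few lines. This Python sketch uses hypothetical rows and arbitrary thresholds (at least 500 monthly searches, difficulty of 40 or below); adjust both to your niche and replace the sample rows with a real export.

```python
from dataclasses import dataclass


@dataclass
class Keyword:
    phrase: str
    volume: int      # monthly searches
    difficulty: int  # 0-100 score, as exported from a keyword tool


def easy_wins(keywords, min_volume=500, max_difficulty=40):
    """Keep keywords above the volume floor and below the difficulty ceiling."""
    picks = [k for k in keywords if k.volume >= min_volume and k.difficulty <= max_difficulty]
    return sorted(picks, key=lambda k: k.volume, reverse=True)


# Hypothetical rows for illustration only
rows = [
    Keyword("house cleaning services", 2400, 62),
    Keyword("house cleaning services in mumbai", 880, 28),
    Keyword("deep home cleaning cost", 590, 35),
    Keyword("how to clean a house fast", 320, 22),
]
for k in easy_wins(rows):
    print(k.phrase, k.volume, k.difficulty)
```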

Duplicate Content in SEO is the same content appearing on different pages. To increase the amount of content, some publishers copy the same content onto different pages, which search engine bots see as duplicate content, and they obviously don’t like it.

Sometimes duplicate content on a website is auto-generated because of URL parameters or paths.

For example, in a Shopify store you can arrive at a product landing page directly via example.com/products/the-product or via a collection path, i.e. example.com/collections/the-collection/products/the-product. It’s the same landing page, so search engine bots will see the two as duplicate content.

In this case, you set the page you actually want to rank as the canonical URL.

For example, you would want to rank the product landing page and not the collection URL leading to it, so on the collection/product URL you set the product landing page as canonical.

For a website, engagement metrics indicate how engaged the audience is with a landing page; they include incoming traffic, bounce rate, social shares and comments.

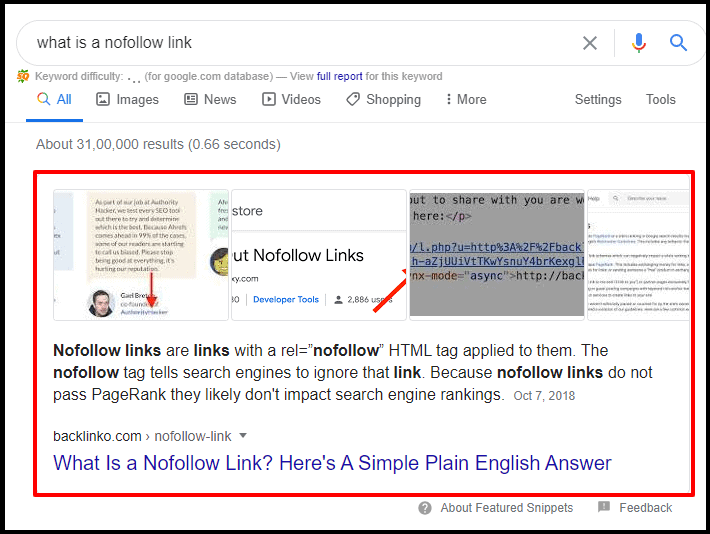

A featured snippet is the “position #0” search result: the one shown above the #1 organic search result.

A featured snippet gives more information than other listings. It is usually a paragraph fetched from the page, but it can also be a list or an image.

YouTube videos can also show up in search results this way.

The pro is that it’s good for branding and capturing attention, and it can sometimes lead to a higher CTR, provided it doesn’t fully answer the searcher’s query.

The con is that if it does fully answer the searcher’s query, there’s no reason for the searcher to click through to your website, and that’s how you lose traffic.

An example of a featured snippet

Rich Results are Google search results with extra information, like reviews/ratings, thumbnail images and rich cards.

To get results like these, you need to implement the appropriate schema markup. There are different types of schema markup, like product, review, organization, person, article and more.

Depending on the page, you have to choose the appropriate schema.

Here’s an example of rich card schema.

Schema, as discussed above, is structured data markup based on the schema.org vocabulary, which Google reads to display rich results. JSON-LD is the format Google recommends. There’s a free tool you can use to create schema markup effortlessly.

Here is the link: https://technicalseo.com/tools/schema-markup-generator/
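If you generate pages programmatically, you can also build the JSON-LD yourself. This Python sketch produces schema.org `Product` markup with an `AggregateRating`, which relates to the review/rating rich results mentioned above; the product details are hypothetical, and Google has its own required and recommended properties for each rich result type, so check your output with the Rich Results Test.

```python
import json


def product_jsonld(name, image, description, rating, review_count):
    """Build schema.org Product markup with an aggregate rating."""
    return {
        "@context": "https://schema.org",
        "@type": "Product",
        "name": name,
        "image": image,
        "description": description,
        "aggregateRating": {
            "@type": "AggregateRating",
            "ratingValue": rating,
            "reviewCount": review_count,
        },
    }


# Hypothetical product for illustration
markup = product_jsonld(
    "Deep Home Cleaning Kit",
    "https://www.example.com/images/cleaning-kit.jpg",
    "Everything you need for a deep home clean.",
    4.6,
    128,
)
print('<script type="application/ld+json">\n' + json.dumps(markup, indent=2) + "\n</script>")
```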

Guest Blogging is a link building technique. You contact bloggers in your business niche by email and ask if you can contribute content to their website for free in exchange for a dofollow backlink.

Once you reach an agreement, you contribute a guest post with a dofollow backlink pointing to your website, and over time you build a high-quality backlink profile.

It’s a give-and-take proposition: you give the website high-quality content, so they have one fewer post to write, and in exchange you receive a valuable backlink that helps your rankings.

Indexability means how crawlable and indexable your website is: whether crawling or indexing errors are preventing your pages from being indexed. For example, your web developer may have mistakenly noindexed your money pages.
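A quick way to catch accidentally noindexed money pages is to check each page’s robots meta tag and `X-Robots-Tag` header. This Python sketch, using the standard `html.parser` module, treats `noindex` or `none` as blocking indexing; it is a simplification that ignores bot-specific header values like `googlebot: noindex`, and the helper names are hypothetical.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collects directives from <meta name="robots"> and <meta name="googlebot">."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "meta" and (attrs.get("name") or "").lower() in ("robots", "googlebot"):
            content = attrs.get("content") or ""
            self.directives |= {d.strip().lower() for d in content.split(",") if d.strip()}


def is_noindexed(html: str, x_robots_tag: str = "") -> bool:
    """True if the page's meta tags or X-Robots-Tag header block indexing."""
    parser = RobotsMetaParser()
    parser.feed(html)
    header = {d.strip().lower() for d in x_robots_tag.split(",") if d.strip()}
    # "none" is shorthand for "noindex, nofollow"
    return bool((parser.directives | header) & {"noindex", "none"})


print(is_noindexed('<head><meta name="robots" content="noindex, follow"></head>'))  # True
```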

Keyword Cannibalization happens when two or more pages on your website compete for the same keywords; as a result, Google gets confused and none of them gets the ranking growth it could.

For example, you wrote one article on SEO Strategies and another on SEO Techniques, and both are competing for the “SEO Techniques” keyword. That’s keyword cannibalization.

The right thing to do is either take down one of the two pages, or consolidate the two into one, and apply a 301 redirect from the page you take down to the one you keep.

Ahrefs has put together a very helpful guide on tackling & fixing keyword cannibalization; check it out here.

Link Building is an Off Page SEO practice where you build links on external websites pointing to the website you want to rank. Various link building techniques help you achieve your SEO goals. Link building takes into account factors like dofollow links, nofollow links and links from different top level domains.

It also involves building links with contextual anchor text, branded anchor text and more.

If you are entirely new to link building, check out the Easy Link Building Methods listicle.

A backlink profile is the combination of all the backlinks you have built for your site, describing its diversity of top level domains & link attributes.

Top level domain backlinks are backlinks from domains like .edu, .org, .eu, etc.

Link attributes like dofollow & nofollow play an equally important role.

If you are a SEMrush user, there is an easy way to glance over a website’s whole backlink profile at once: simply go to the Backlinks report.
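If you export backlinks from a tool, you can summarize the profile yourself. This Python sketch counts referring hostnames, top level domains and followed vs nofollow links from (source URL, rel) pairs; the sample links are hypothetical, it treats ugc and sponsored as nofollow, and it counts referring domains per hostname, which is a simplification.

```python
from collections import Counter
from urllib.parse import urlsplit


def profile_summary(backlinks):
    """Summarize (source_url, rel) pairs by hostname, TLD and link attribute."""
    tlds = Counter()
    attributes = Counter()
    hosts = set()
    for source, rel in backlinks:
        host = urlsplit(source).hostname or ""
        hosts.add(host)
        tlds["." + host.rsplit(".", 1)[-1]] += 1
        tokens = set(rel.lower().split())
        attributes["nofollow" if tokens & {"nofollow", "ugc", "sponsored"} else "dofollow"] += 1
    return {"referring_domains": len(hosts), "tlds": tlds, "attributes": attributes}


# Hypothetical export rows
links = [
    ("https://www.uni.edu/resources", ""),
    ("https://blog.example.org/post", "nofollow"),
    ("https://news.example.com/story", ""),
    ("https://news.example.com/other", "ugc"),
]
print(profile_summary(links))
```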

A sitemap is an XML file that lists the URLs on your website, giving search engines a map of its pages.

As a best practice, make your sitemap easy for search bots to discover, for example by linking to it in the footer, referencing it in robots.txt and submitting it in Google Search Console, so that bots can find it and crawl the pages listed in it.
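Most CMSs generate the sitemap for you, but for a static site you can build one with Python’s standard `xml.etree.ElementTree`. This sketch writes the basic `urlset`/`url`/`loc`/`lastmod` structure from the sitemaps.org protocol; the URLs and dates are hypothetical.

```python
import xml.etree.ElementTree as ET

NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(urls):
    """urls: iterable of (loc, lastmod) pairs; lastmod is YYYY-MM-DD or None."""
    urlset = ET.Element("urlset", xmlns=NS)
    for loc, lastmod in urls:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        if lastmod:
            ET.SubElement(url, "lastmod").text = lastmod
    # Write the declaration by hand so it always says UTF-8
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + ET.tostring(urlset, encoding="unicode")


xml = build_sitemap([
    ("https://www.example.com/", "2020-05-01"),
    ("https://www.example.com/house-cleaning-services", None),
])
print(xml)
```

Save the output as sitemap.xml at your site root, then reference it in robots.txt with a line like `Sitemap: https://www.example.com/sitemap.xml`.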

Robots.txt is a text file that is read by search bots. Using robots.txt, we let the search bot know which files it is allowed to crawl and which files it is not.

Usually, pages that involve confidential customer data or sensitive business information are kept from being crawled by putting them under Disallow. Keep in mind that robots.txt is a public, advisory file: well-behaved bots respect it, but it does not by itself secure those pages.

Pages like cart, account and login are put under Disallow.

E-Commerce store builders like Shopify and PrestaShop come with robots.txt generators by default that add Disallow rules for private customer pages like login, account, cart and more, which makes your job easier.
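You can check how a compliant crawler would read a robots.txt file with Python's standard `urllib.robotparser`. The file below is an illustrative example of the cart/account/login pattern described above, not the actual default file of Shopify or PrestaShop, and example.com is a placeholder.

```python
from urllib.robotparser import RobotFileParser

# Illustrative robots.txt (not any platform's real default file).
ROBOTS_TXT = """\
User-agent: *
Disallow: /cart
Disallow: /account
Disallow: /login

Sitemap: https://www.example.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# Disallow rules match by path prefix, so /account/orders is blocked too.
for path in ["/cart", "/account/orders", "/products/blue-shoes"]:
    allowed = parser.can_fetch("Googlebot", "https://www.example.com" + path)
    print(f"{path}: {'allowed' if allowed else 'disallowed'}")

print("Sitemaps:", parser.site_maps())
```

This only tells you what a bot that honours robots.txt will skip; the disallowed URLs remain reachable by anyone who requests them.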

A long tail keyword is a search phrase of three or more words. Long tail keywords are easier to rank for than short-tail keywords, and ranking for them is a way to work toward ranking for short-tail keywords that have huge search volume.

An example of a long tail keyword is “Digital Marketing Agency for Small Business”.

Take a look at this graph put together by SEMrush to get a better idea.
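The word-count rule of thumb is easy to apply to a keyword list. This tiny sketch assumes the "three or more words" threshold used above; real keyword research also weighs search volume and competition, which word count alone does not capture. The `keyword_type` name is hypothetical.

```python
def keyword_type(keyword: str) -> str:
    """Label a keyword long-tail if it has three or more words, else short-tail."""
    return "long-tail" if len(keyword.split()) >= 3 else "short-tail"

for kw in ["digital marketing", "digital marketing agency for small business"]:
    print(f"{kw}: {keyword_type(kw)}")
```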

On Page SEO is the set of activities we carry out on the website itself to make it search engine friendly. This involves updating meta tags (Meta Title, Meta Description, Meta Keywords and Meta Robots; note that Google ignores Meta Keywords for ranking), image alt attributes and internal linking.

Other On Page elements include optimizing heading tags and content.
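A basic On Page check can be scripted with Python's standard `html.parser`. This is a minimal sketch, not a full audit tool: it collects the title, meta description and H1 headings, and lists images whose alt attribute is missing or empty. The sample HTML and the `OnPageAudit` class are hypothetical.

```python
from html.parser import HTMLParser

class OnPageAudit(HTMLParser):
    """Collect the title, meta description, H1s and images without alt text."""

    def __init__(self):
        super().__init__()
        self.title = ""
        self.meta_description = None
        self.h1s = []
        self.images_missing_alt = []
        self._in_title = False
        self._in_h1 = False

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "title":
            self._in_title = True
        elif tag == "h1":
            self._in_h1 = True
            self.h1s.append("")
        elif tag == "meta" and (attrs.get("name") or "").lower() == "description":
            self.meta_description = attrs.get("content", "")
        elif tag == "img" and not attrs.get("alt"):
            # Flags both a missing alt attribute and an empty one.
            self.images_missing_alt.append(attrs.get("src", ""))

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False
        elif tag == "h1":
            self._in_h1 = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data
        elif self._in_h1:
            self.h1s[-1] += data

# Hypothetical page.
page = """<html><head><title>SEO Glossary</title>
<meta name="description" content="Key SEO terms explained.">
</head><body><h1>SEO Glossary</h1><img src="chart.png"><img src="logo.png" alt="Logo"></body></html>"""

audit = OnPageAudit()
audit.feed(page)
print("Title:", audit.title, f"({len(audit.title)} characters)")
print("Meta description:", audit.meta_description)
print("H1 tags:", audit.h1s)
print("Images missing alt text:", audit.images_missing_alt)
```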

PBN stands for Private Blog Network; it’s a black hat link building strategy that Google dislikes. The three telltale signs of a PBN website are an identical or similar UX across sites, high domain authority, and duplicate, missing or plagiarized content.

This might make you wonder how these sites have high domain authority. The answer is that PBN owners buy expired domains that already have good domain authority and then use these domains to build links to their money sites.

There are Facebook Groups where people buy PBN backlinks, but I would never recommend this strategy. It is a shortcut that can get your website penalized by Google.

Ranking factors (or ranking signals) are the factors that determine how a website ranks on search engines like Google. According to SEO experts, Google takes 200+ ranking factors into account when ranking a website.

Commonly cited ranking factors include meta tags, E-A-T, BERT, social signals, backlinks and many more.

If you are interested in finding out what these 200+ Google ranking factors are, check out Backlinko’s killer guide.

In SEO, a redirect usually means a 301 Redirect or a 302 Redirect.

A 301 Redirect means the web page has permanently moved to a different URL, and search engines will pass the ranking signals of the old page to the new one.

If the old page is still ranking on Google and people click that result, they will be redirected to the new page.

This is helpful when you are changing your domain name and the existing website has tons of pages.

A 302 Redirect, on the other hand, means the page has moved temporarily, so search engines generally keep the original URL indexed instead of passing its rankings to the temporary destination.

301 and 302 redirects are applied through the .htaccess file on an Apache server (which you can edit over FTP) or, if you are a WordPress user, through the redirect manager in the Yoast SEO plugin (a Yoast SEO Premium feature).
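Under the hood, a redirect is just a status code plus a Location header. This minimal sketch assumes a hypothetical redirect map and shows the status line and headers a server would send for each rule; the paths, URLs and `redirect_response` name are illustrative, and the reason phrases come from Python's standard `http.HTTPStatus`.

```python
from http import HTTPStatus

# Hypothetical redirect rules: old path -> (status code, new URL).
REDIRECTS = {
    "/old-seo-guide": (301, "https://www.example.com/seo-guide"),      # moved for good
    "/summer-sale": (302, "https://www.example.com/sale-coming-soon"),  # temporary
}

def redirect_response(path):
    """Return the (status line, headers) a server would send for path, or None if no rule matches."""
    rule = REDIRECTS.get(path)
    if rule is None:
        return None
    status, location = rule
    # HTTPStatus supplies the standard reason phrase: 301 -> "Moved Permanently", 302 -> "Found".
    return f"{status} {HTTPStatus(status).phrase}", [("Location", location)]

for path in ["/old-seo-guide", "/summer-sale", "/about"]:
    print(path, "->", redirect_response(path))
```

The returned pair has the same shape a WSGI `start_response(status, headers)` call expects. On Apache, the equivalent .htaccess rule uses mod_alias, for example `Redirect 301 /old-seo-guide https://www.example.com/seo-guide`.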

Sitelinks are additional links that appear right below the main URL in the SERP and take users to specific pages of the same site. Sitelinks can increase CTR to different pages of the same domain.

Google awards sitelinks automatically, and the pages that are shared more and have more backlinks tend to be the ones that appear as sitelinks.


An SSL Certificate is what it takes to serve your website over HTTPS, and it does affect your SEO: Google uses HTTPS as a ranking signal. Browsers such as Chrome also warn visitors that a page served over plain HTTP is “Not secure”.

HTTPS is even more important for websites where users enter confidential data, such as E-Commerce stores and bank and insurance websites.

Some hosting providers include SSL Certificates for free in their plans; otherwise you have to get one from a third party.

Cloudflare is a trustworthy SSL Certificate provider, plus it’s free.

A subdomain is a domain that is part of a larger domain. For example, abc.wordpress.com is a subdomain of the main domain wordpress.com.

Subdomains are used in Web 2.0 link building, where free subdomains from platforms like Blogger, WordPress, Strikingly, Weebly and more serve as link sources.

Subdomains are also used in SEO for SaaS websites and content-heavy websites, where each subdomain handles a different task; for example, blog.example.com would host the blog. Authority is shared and transferred between the main website and its subdomains.

A subdirectory is a folder that sits under the main domain itself in the URL path. It’s a good way to compartmentalize the information on a website.

For example, for a website selling SaaS services, the ideal subdirectory paths could be:

www.example.com/solutions/the-solution

www.example.com/products/the-product

www.example.com/blog/the-amazing-ninja-guide

This is how you segregate content using subdirectories; in these examples, “solutions”, “products” and “blog” are the subdirectories.

These subdirectories build their own authority over time through content, internal linking and link building efforts, which complements the domain overall.
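The difference between a subdomain and a subdirectory is simply where the label sits in the URL. This sketch uses Python's standard `urllib.parse.urlsplit` to pull both out. It assumes the root domain is known in advance (example.com here); splitting arbitrary hostnames such as example.co.uk correctly would need the Public Suffix List. The `locate` name is hypothetical.

```python
from urllib.parse import urlsplit

def locate(url, root_domain="example.com"):
    """Return (subdomain, first subdirectory) for a URL on root_domain.

    root_domain is an assumption passed in by the caller.
    """
    parts = urlsplit(url)
    host = parts.hostname or ""
    if host == root_domain:
        subdomain = None
    elif host.endswith("." + root_domain):
        subdomain = host[: -len(root_domain) - 1]  # strip ".example.com"
    else:
        raise ValueError(f"{host!r} is not on {root_domain}")

    segments = [s for s in parts.path.split("/") if s]
    # The last segment is the page itself unless the path ends with "/".
    folders = segments if parts.path.endswith("/") else segments[:-1]
    return subdomain, (folders[0] if folders else None)

for url in [
    "https://www.example.com/blog/the-amazing-ninja-guide",
    "https://blog.example.com/the-amazing-ninja-guide",
    "https://www.example.com/solutions/",
]:
    print(url, "->", locate(url))
```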

URL Parameters are the key-value pairs in a URL’s query string (the part after the ?), and they help with tracking shared links. For example, using Google’s Campaign URL Builder you can build links with UTM parameters and track them by campaign name in Google Analytics.

Using Campaign URL Builder, you can track clicks on Google My Business links, social media links, social media ad campaigns, email marketing campaigns and more.
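If you tag many links, you can script roughly what the Campaign URL Builder does. This minimal sketch appends the standard Google Analytics UTM parameters (utm_source, utm_medium, utm_campaign, and optionally utm_term and utm_content) while keeping any query string the URL already has. The `add_utm` name and the example values are hypothetical.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

def add_utm(url, source, medium, campaign, term=None, content=None):
    """Append UTM parameters to url, preserving its existing query parameters."""
    parts = urlsplit(url)
    params = parse_qsl(parts.query, keep_blank_values=True)
    utm = {
        "utm_source": source,
        "utm_medium": medium,
        "utm_campaign": campaign,
        "utm_term": term,
        "utm_content": content,
    }
    params += [(key, value) for key, value in utm.items() if value is not None]
    # urlencode percent-encodes values and turns spaces into "+".
    return urlunsplit(parts._replace(query=urlencode(params)))

print(add_utm("https://www.example.com/seo-glossary", "google", "organic", "gmb_profile"))
```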

Search Engine Rankings refers to the position a web page holds on a search engine for a given keyword.

70. Internal Links

Internal Linking in SEO is the practice of linking your web pages to each other, so that when a search engine crawler crawls one page it can find the other pages and understand how they relate. This helps a great deal with establishing topical authority, and it also helps your pages get indexed faster.

Recommended: How Google Search Console can help you improve internal linking?
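Because crawlers discover pages by following links, a page that sits many clicks from the homepage, or that no page links to at all (an orphan page), is harder to find. This sketch runs a breadth-first search over a hypothetical internal-link graph to compute each page's click depth and list orphans; the graph and names are illustrative, and a real graph would come from a site crawl.

```python
from collections import deque

# Hypothetical internal-link graph: page -> pages it links to.
LINKS = {
    "/": ["/blog", "/services"],
    "/blog": ["/blog/seo-glossary", "/blog/link-building"],
    "/services": [],
    "/blog/seo-glossary": ["/blog/link-building"],
    "/blog/link-building": [],
    "/blog/old-case-study": [],  # nothing links here
}

def click_depth(links, start="/"):
    """Breadth-first search from start: number of clicks needed to reach each page."""
    depth = {start: 0}
    queue = deque([start])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depth:
                depth[target] = depth[page] + 1
                queue.append(target)
    return depth

depths = click_depth(LINKS)
orphans = sorted(set(LINKS) - set(depths))
print(depths)
print("Orphan pages:", orphans)
```

Adding an internal link to an orphan page from a related, well-linked article is the simplest fix.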

71. Outbound Links

An outbound link is a link on your web page that points to an external website rather than to one of your own web pages.

Outbound linking is encouraged because it points users and bots to credible sources, especially when you are citing facts and stats. For example, if you say that X number of people in the U.S. use Bing, you need to add an outbound link where users can go to confirm the stat.
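To see which links on a page are internal and which are outbound, compare each link's hostname with the page's own. This is a minimal sketch with Python's standard `html.parser` and `urllib.parse`; the sample HTML, page URL and helper names are hypothetical, and it treats www.example.com and example.com as different hosts for simplicity.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlsplit

class LinkCollector(HTMLParser):
    """Collect every <a href> on a page, resolved to an absolute URL."""

    def __init__(self, page_url):
        super().__init__()
        self.page_url = page_url
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.links.append(urljoin(self.page_url, href))

def split_links(page_url, html):
    """Return (internal, outbound) link lists for the page."""
    collector = LinkCollector(page_url)
    collector.feed(html)
    own_host = urlsplit(page_url).hostname
    internal, outbound = [], []
    for url in collector.links:
        parts = urlsplit(url)
        if parts.scheme not in ("http", "https"):
            continue  # skip mailto:, tel:, javascript: and similar
        (internal if parts.hostname == own_host else outbound).append(url)
    return internal, outbound

PAGE_URL = "https://www.example.com/blog/bing-stats"
SAMPLE_HTML = (
    '<p>According to <a href="https://www.example.org/bing-usage-study">a usage study</a>, '
    'see our <a href="/blog/seo-glossary">SEO glossary</a> or '
    '<a href="mailto:hi@example.com">email us</a>.</p>'
)
internal, outbound = split_links(PAGE_URL, SAMPLE_HTML)
print("Internal:", internal)
print("Outbound:", outbound)
```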

72. Link Farm

Link Farms are networks of websites built to link to each other, or collectively to a money site, so as to boost the PageRank of the target website; this is a black hat tactic and Google hates it.

73. HTTP Status Code

An HTTP status code is a server’s response to a browser’s request.

There are various HTTP status codes like 200, 301, 302, 307, 400, 500, 523 (a Cloudflare-specific code) and more.

For pages you want users and search bots to see, it is a best practice to aim for a 200 status code. A 200 status code serves the page to users and bots just the way you built it.
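The first digit of a status code tells you its class (2xx success, 3xx redirect, 4xx client error, 5xx server error). This sketch uses Python's standard `http.HTTPStatus` to look up the reason phrase for the codes listed above; codes outside Python's registry of standard codes, like Cloudflare's 523, are labelled non-standard. The `describe_status` name is hypothetical.

```python
from http import HTTPStatus

STATUS_CLASSES = {1: "informational", 2: "success", 3: "redirect",
                  4: "client error", 5: "server error"}

def describe_status(code):
    """Return (reason phrase, status class) for an HTTP status code."""
    try:
        phrase = HTTPStatus(code).phrase
    except ValueError:
        # Not in Python's registry of standard codes, e.g. Cloudflare's 523.
        phrase = "non-standard code"
    return phrase, STATUS_CLASSES.get(code // 100, "unknown")

for code in [200, 301, 302, 307, 400, 500, 523]:
    print(code, describe_status(code))
```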

74. Natural Links

Natural Links are backlinks from external websites that you earn without making any conscious effort to build them.

One of the best ways to earn natural backlinks is to create content that adds value to the users.

75. Broken Link

A broken link is a link that leads to a page that no longer exists, which returns a 404 (Not Found) error.

Google does not pass any PageRank from the external backlink to a 404 page.

It is a good practice to reclaim broken backlinks, either by recreating the page that went missing or by emailing the publisher to replace the broken backlink on their page with an updated one.
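Before reclaiming broken backlinks, you need to know which target URLs are dead. This minimal sketch sends HEAD requests with Python's standard `urllib.request` and flags URLs that answer 404 Not Found or 410 Gone. The helper names are hypothetical; some servers reject HEAD requests (405), which a fuller checker would retry with GET, and the status lookup is passed in as a parameter so it can be swapped out, for example for offline testing.

```python
import urllib.error
import urllib.request

def fetch_status(url, timeout=10):
    """Send a HEAD request and return the final HTTP status code (redirects are followed).

    Returns None if the host cannot be reached or the request times out.
    """
    request = urllib.request.Request(url, method="HEAD")
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as err:  # 4xx and 5xx responses are raised as HTTPError
        return err.code
    except OSError:  # URLError (DNS failure, refused connection) and timeouts
        return None

def find_broken(urls, get_status=fetch_status):
    """Return the URLs that answer 404 Not Found or 410 Gone."""
    return [url for url in urls if get_status(url) in (404, 410)]
```

Usage (requires network access): call `find_broken` with the target URLs from your backlink export; each URL it returns is a candidate for recreating the page or asking the publisher to update the link.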

Kunjal Chawhan, founder of Decode Digital Market, a Digital Marketer by profession and a Digital Marketing Niche Blogger by passion, here to share my knowledge.